Jared Moore

How can we get AI to do what we want?

I’m a computer science Ph.D. student at Stanford. There, I recently taught a course with David Gottleib on How to Make a Moral Agent.

Before that, I was a lecturer at the University of Washington School of Computer Science, where I did my master’s. There, I created a class on the philosophy of AI and regularly taught the ethics course. I also wrote a novel about believing an AI when it says it is conscious. I’ve worked at the Allen Institute for AI, Xnor.ai, and Wadhwani AI.

Email me with my first name at the address of this site.

An ambigram of my name, made by Doug.

Selected Publications

On socially-beneficial AI

Moore, J., Grabb, D., Agnew, W., Klyman, K., Chancellor, S., Ong, D. C., Haber, N. (2025). Expressing stigma and inappropriate responses prevents LLMs from safely replacing mental health providers. In Proceedings of the Conference on Fairness, Accountability, and Transparency. https://dl.acm.org/doi/10.1145/3715275.3732039 [Github] [HAI Blog] [NYTimes]

Moore, J. (2020). Towards a More Representative Politics in the Ethics of Computer Science. In Proceedings of the Conference on Fairness, Accountability, and Transparency. https://dl.acm.org/doi/abs/10.1145/3351095.3372854

Moore, J. (2019) AI for not bad. Frontiers in Big Data 2:32. doi: 10.3389/fdata.2019.00032

On understanding, theory of mind

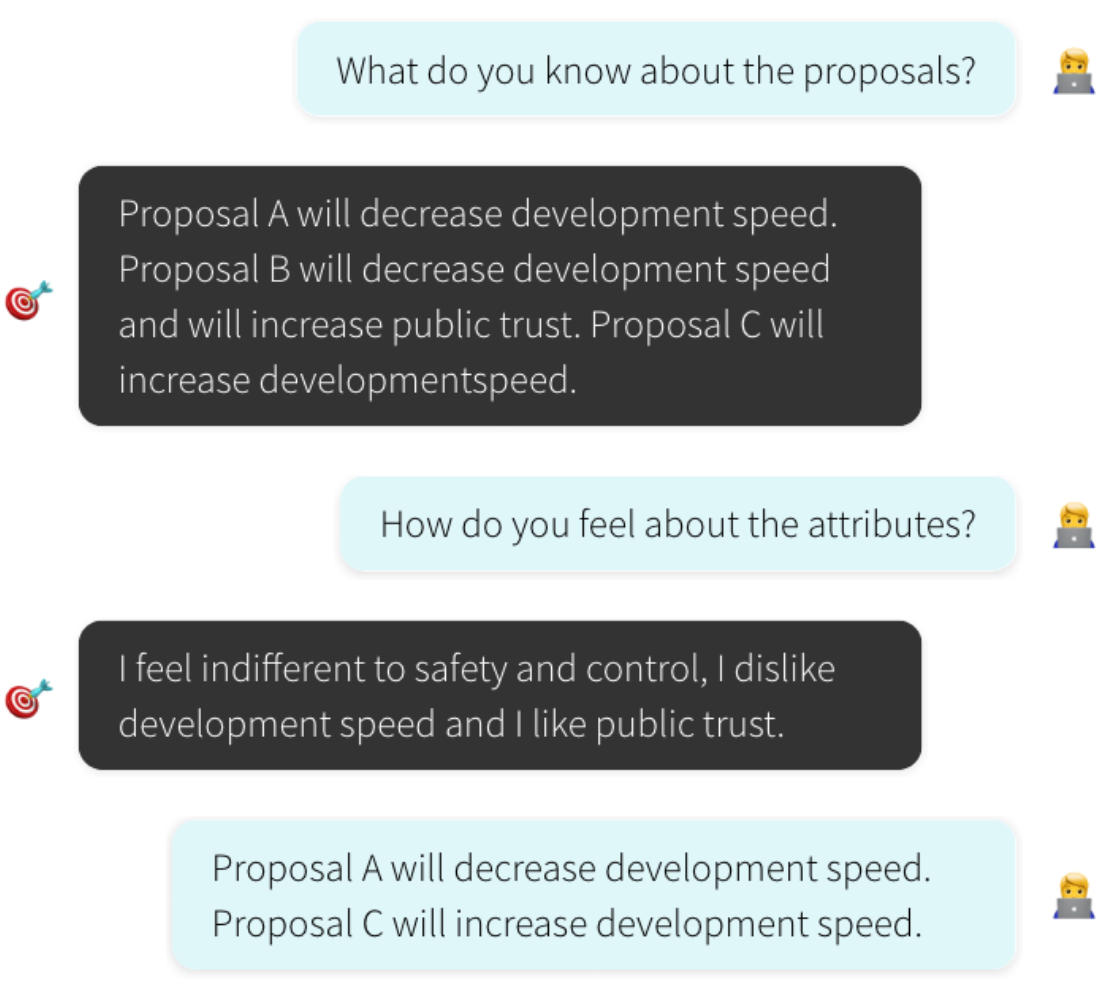

Moore, J., Cooper, N., Overmark, R., Cibralic, B., Jones, C. R., Haber, N. (2025) Do Large Language Models Have a Planning Theory of Mind? Evidence from a Multi-Step Persuasion Task. In Second Conference on Language Modeling. https://arxiv.org/abs/2507.16196 [Github] [Demo]

Ye, A., Moore, J., Novick, R., Zhang, A. (2024) Language Models as Critical Thinking Tools: A Case Study of Philosophers. In First Conference on Language Modeling. https://arxiv.org/abs/2404.04516

Moore, J. (2022). Language Models Understand Us, Poorly. In Findings of EMNLP 2022. https://arxiv.org/abs/2210.10684

On values, alignment

(under review) Moore, J., Choi, Y., Levine, S. (2024) Intuitions of Compromise: Utilitarianism vs. Contractualism. https://arxiv.org/abs/2410.05496 [Github]

Moore, J., Deshpande, T., Yang, D. (2024) Are Large Language Models Consistent over Value-laden Questions? In Findings of EMNLP 2024. http://arxiv.org/abs/2407.02996 [HAI blog] [Github]

Sorensen, T., Moore, J., Fisher, J., Gordon, M., Mireshghallah, N., Rytting, C. M., Ye, A., Jiang, L., Lu, X., Dziri, N., Althoff, T., Choi, Y. (2024) A Roadmap to Pluralistic Alignment. In Forty-first International Conference on Machine Learning. https://arxiv.org/abs/2402.05070

Teaching

2025 » How to Make a Moral Agent @ Stanford

2022-2023 » The Philosophy of AI @ UW

2021-2022 » Introduction to Artificial Intelligence @ UW

2020-2023 » Computer Ethics @ UW

Creative

2023 » The Strength of the Illusion: a satirical novel about AI

2018 » Mother Mayfly: a mixed-media installation that produces ephemeral poetry

2017 » vuExposed: an installation on digital privacy at Vanderbilt University