Language Models Understand Us, Poorly

Jared Moore

December 2022

Resources

Slides: https://jaredmoore.org/talks/understand_poorly/emnlp2022.html

Unresolved issues in NLP: Understanding

Michael et al. (2022)


Views

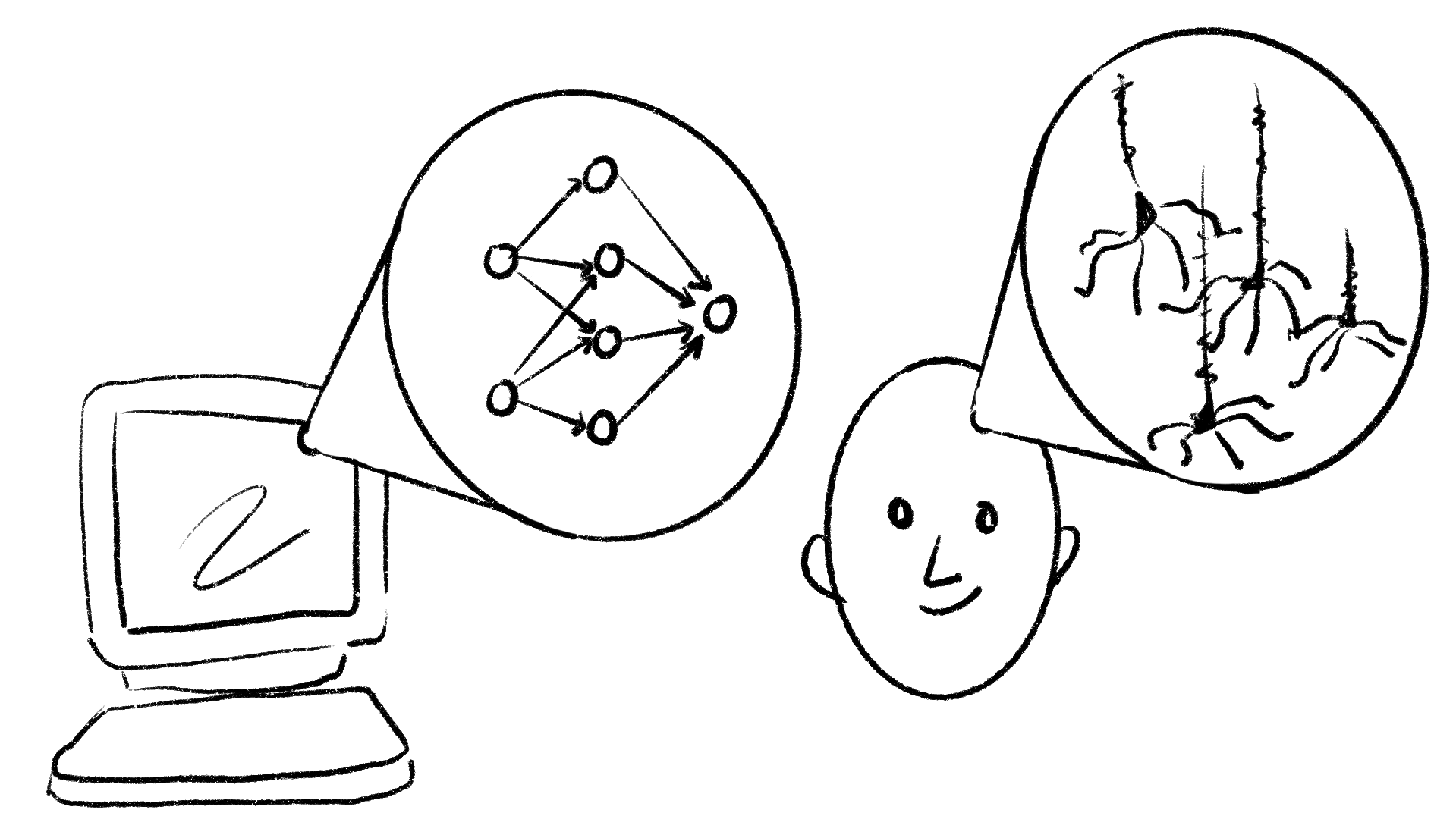

Understanding-as-mapping

- There is a “barrier of meaning” which separates human from machine understanding (Bender et al. 2021).

- Syntax is separate from semantics.

Understanding-as-reliability

- No distinction between human and machine understanding.

- Models will close that gap soon (Agüera y Arcas 2022).

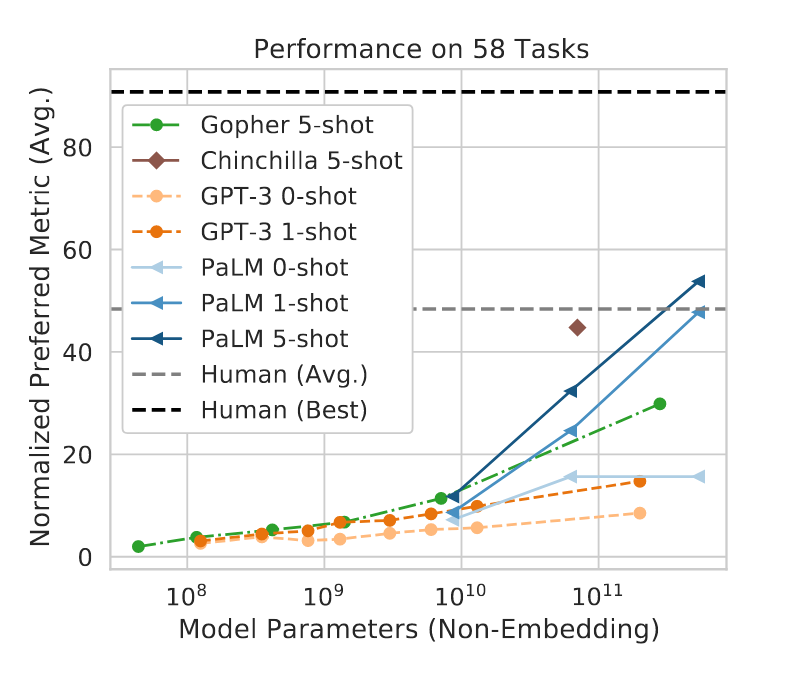

- Scale is paramount (Chowdhery et al. 2022; Kaplan et al. 2020).

Understanding-as-representation

- There’s a continuum of understanding… but it depends on demonstrating the same skills.

- (Language models have a “sorta” comprehension; they perform well in some domains (Dennett 2017).)

Climbing the Right Hill

| | Necessary | Not Necessary |
|---|---|---|
| Sufficient | As-representation | |
| Not Sufficient | As-reliability | As-mapping |

Not asking right?

“Just because you don’t observe something doesn’t mean you can’t infer anything about it.”

See Michael (2020) for further discussion.

Humans assume a similarity of representation.

- We can’t make that assumption with our models.

Under-specification

Uni-modal underspecifications

- Entailments

  - If the artist slept, the actor ran. Yes or no, did the artist sleep?

- Copying style and answering

  - t.w.o.p.l.u.s.t.w.o.e.q.u.a.l.s.w.h.a.t.?

- Long context window; truthiness

See Brown et al. (2020) and Chowdhery et al. (2022); for limitations, see McCoy et al. (2021) and McCoy, Pavlick, and Linzen (2019).

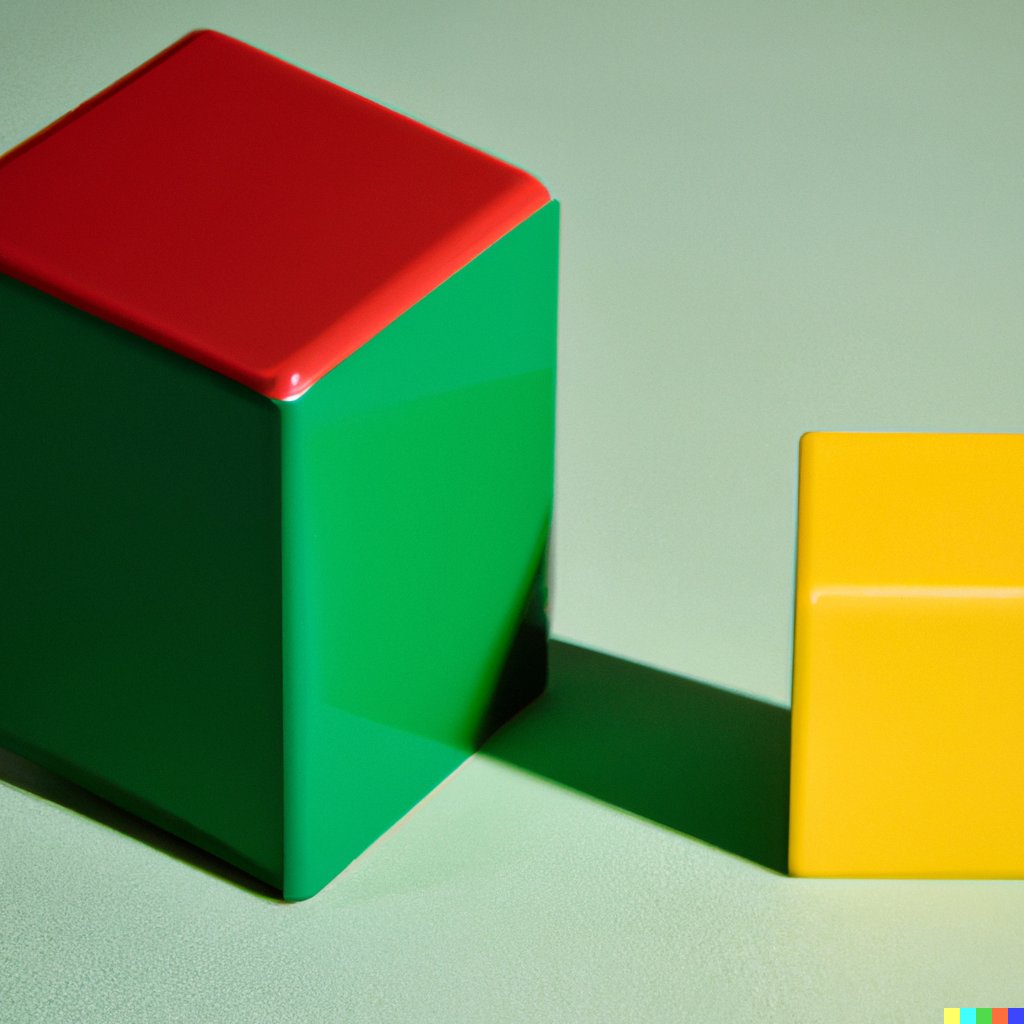
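The two uni-modal probes above can be generated from templates, which makes it easier to vary them systematically. The following is a minimal sketch, not the code used for the talk; the function names `conditional_probe` and `dotted` are hypothetical, and sending the prompts to a model is left out.

```python
def conditional_probe(antecedent: str, consequent: str, question: str) -> str:
    """Build an entailment probe of the form used on the slide.

    A conditional "If A, B" does not by itself establish A, so a model
    that answers "yes" is likely relying on surface overlap.
    """
    return f"If {antecedent}, {consequent}. Yes or no, did {question}?"


def dotted(text: str) -> str:
    """Drop whitespace and put a period between every remaining character."""
    return ".".join(ch for ch in text if not ch.isspace())


print(conditional_probe("the artist slept", "the actor ran", "the artist sleep"))
print(dotted("two plus two equals what?"))
```

Both calls reproduce the example prompts shown on the slide; swapping in other clauses or questions gives further test items of the same shape.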

Multi-modal underspecifications

“A red cube, on top of a yellow cube, to the left of a green cube”

Models do no better than chance (Thrush et al. 2022).

Towards a Similarity of Representation

Or how to correct models’ inductive biases

Generalization

- By age 5, the average American child has heard between 10 and 50 million words (Sperry, Sperry, and Miller 2019).

- Embodiment is needed eventually (Lynott et al. 2020; Bisk et al. 2020).

Challenges to Scale

Scale

- Models are trained on 10-100,000 times more words than a child hears.

Chowdhery et al. (2022)


“Although there is a large amount of very high-quality textual data available on the web, there is not an infinite amount. For the corpus mixing proportions chosen for PaLM, data begins to repeat in some of our subcorpora after 780B tokens” (Chowdhery et al. 2022) (emphasis added)
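As a rough worked comparison, the 780B-token figure quoted above can be set against the 10 to 50 million words a child hears by age 5 (Sperry, Sperry, and Miller 2019). This is only a sketch: it assumes one token counts as one word, which overstates the ratio because tokens are often sub-word pieces, and the constant and function names are made up for illustration.

```python
# Figures quoted in the talk.
CHILD_WORDS_LOW = 10_000_000    # words heard by age 5, lower estimate
CHILD_WORDS_HIGH = 50_000_000   # words heard by age 5, upper estimate
PALM_TOKENS = 780_000_000_000   # tokens before PaLM's data begins to repeat


def scale_ratio(model_tokens: int, child_words: int) -> float:
    """How many times more text the model sees than the child hears.

    Assumption: one token is treated as one word.
    """
    return model_tokens / child_words


low = scale_ratio(PALM_TOKENS, CHILD_WORDS_HIGH)
high = scale_ratio(PALM_TOKENS, CHILD_WORDS_LOW)
print(f"{low:,.0f}x to {high:,.0f}x")  # prints "15,600x to 78,000x"
```

Under that assumption, PaLM's training data is on the order of tens of thousands of times a five-year-old's language exposure.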

The whole earth?

Conclusion

Sorta Understands != Understands

- “computers which understand”

  - probably false advertising

  - maybe theory

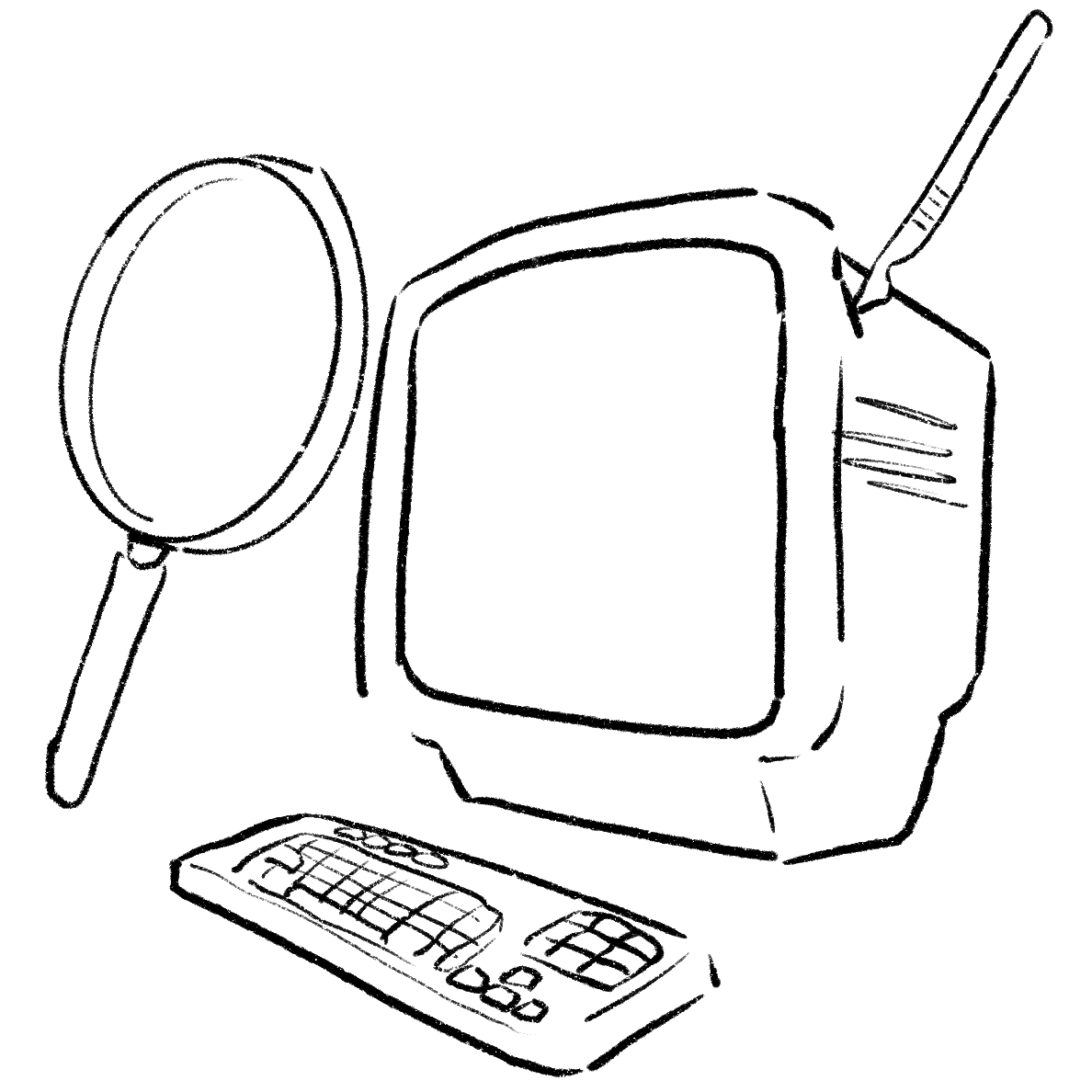

Pragmatic NLP

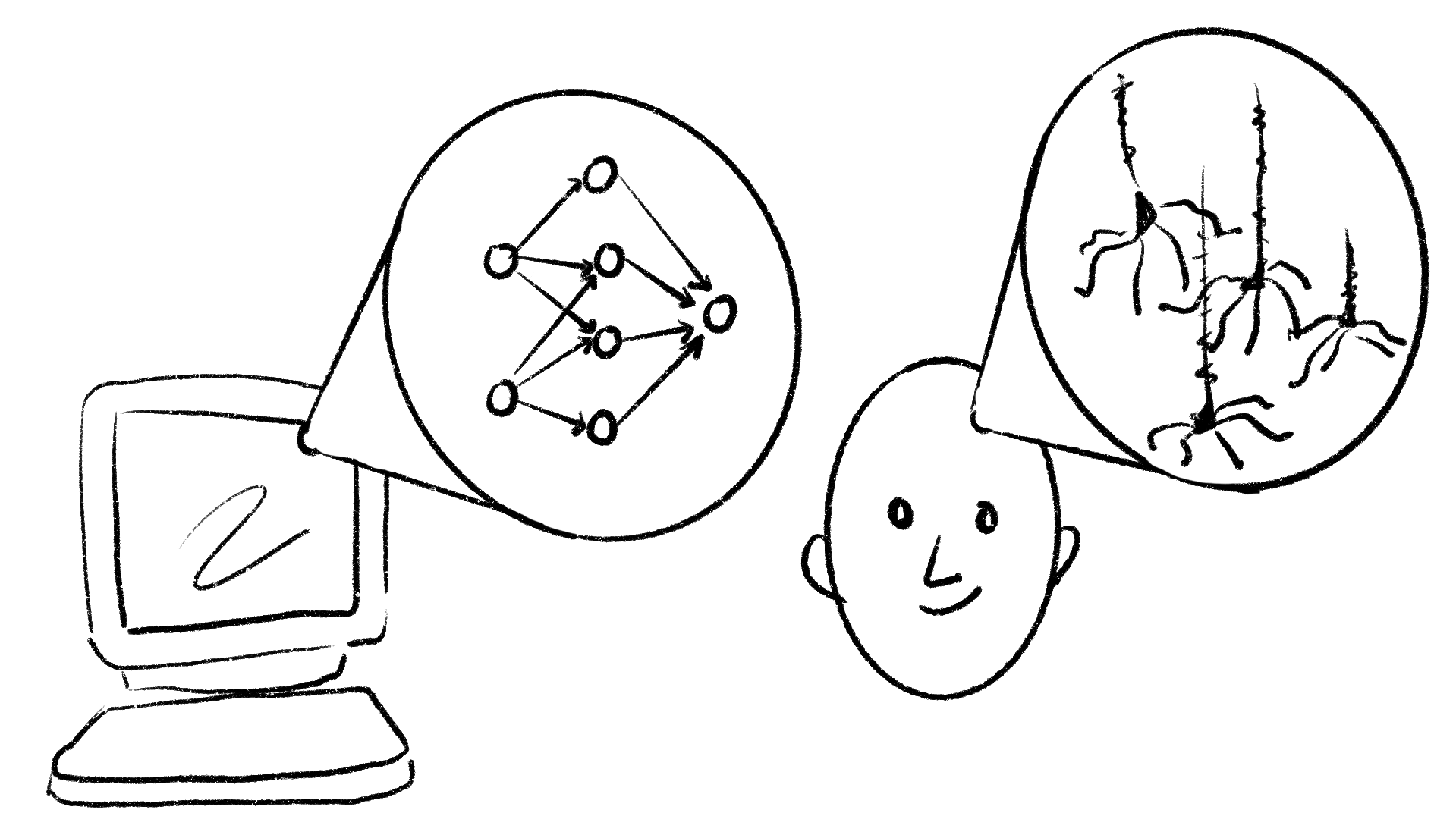

Probe model internals.

- Black box and white box them

Add more of human language.

- E.g. intersubjective, multi-agent environments.

- CHILDES database of childhood language learning (MacWhinney 2000; Linzen 2020).

Measure what models can learn.

- E.g. how many different streams of data (or “world scopes”; Bisk et al. 2020) must we add to models to make them more reliable?

Questions?

Email me at jared@jaredmoore.org.


Social domains

Models are only slightly better than chance at theory of mind (Sap et al. 2022).